177 results on '"Rudolf Koch"'

Search Results

2. Automatic Interpretation of Multispectral Aerial Photographs

- Author

-

Karl-Rudolf Koch

- Subjects

Interpretation ,Automatic ,Aerial Photographs ,Multispectral ,Geography. Anthropology. Recreation ,Cartography ,GA101-1776

- Published

- 2020

3. A configurable cascaded continuous-time ΔΣ modulator with up to 15MHz bandwidth.

- Author

-

Jens Sauerbrey, Jacinto San Pablo Garcia, Georgi Panov, Thomas Piorek, Xianghua Shen, Markus Schimper, Rudolf Koch, Matthias Keller, Yiannos Manoli, and Maurits Ortmanns

- Published

- 2010

- Full Text

- View/download PDF

4. A Fully Integrated SoC for GSM/GPRS in 0.13µm CMOS.

- Author

-

Pierre-Henri Bonnaud, Markus Hammes, Andre Hanke, Jens Kissing, Rudolf Koch, Eric Labarre, and Christoph Schwoerer

- Published

- 2006

- Full Text

- View/download PDF

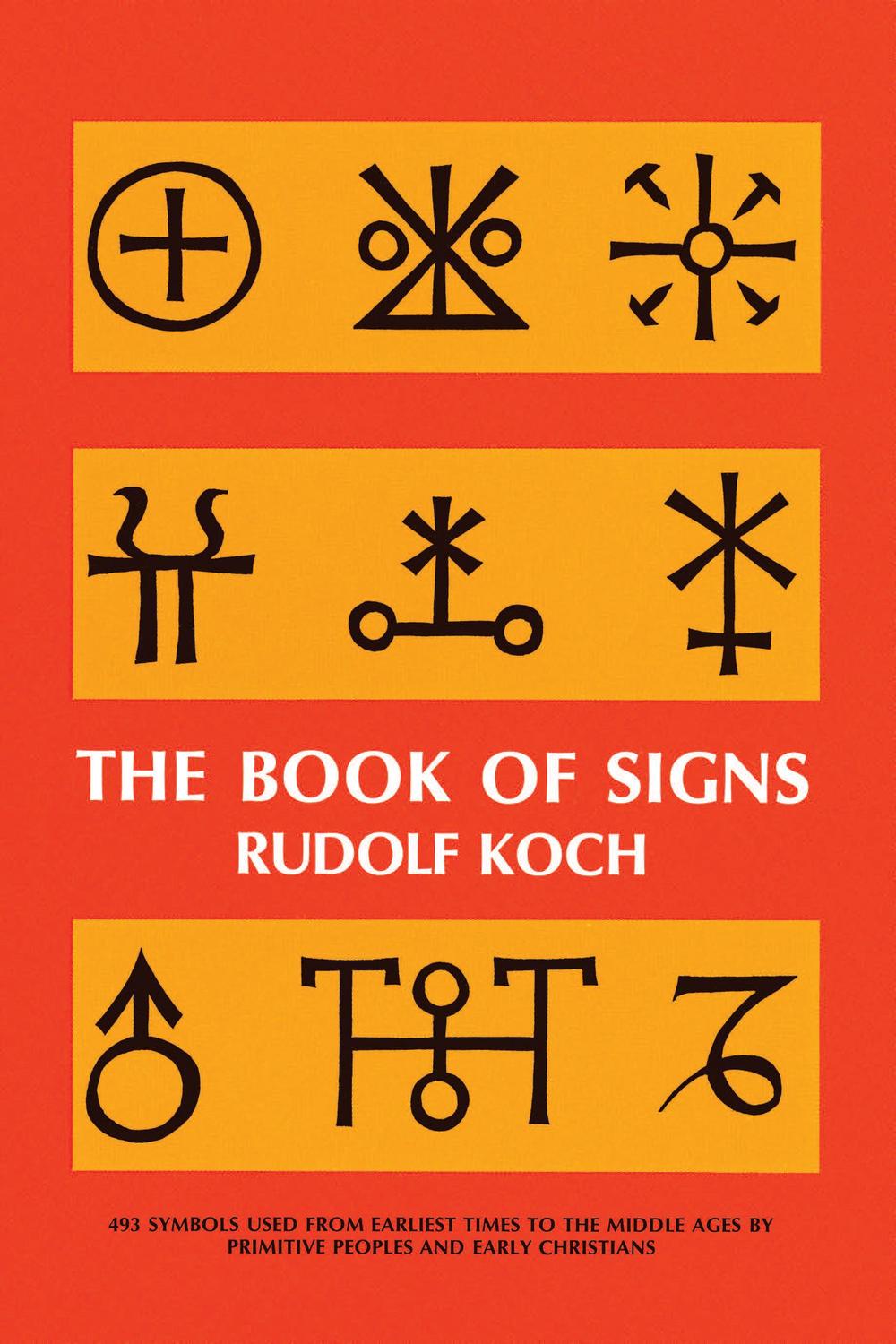

5. The Book of Signs

- Author

-

Rudolf Koch

- Published

- 2013

6. Green Electronics: Environmental Impacts, Power, E-Waste.

- Author

-

Jan Sevenhans and Rudolf Koch

- Published

- 2008

- Full Text

- View/download PDF

7. Bayesian statistics and Monte Carlo methods

- Author

-

Karl-Rudolf Koch

- Subjects

010504 meteorology & atmospheric sciences ,Computer science ,probability ,Monte Carlo method ,0603 philosophy, ethics and religion ,01 natural sciences ,Statistics ,Earth and Planetary Sciences (miscellaneous) ,Computers in Earth Sciences ,0105 earth and related environmental sciences ,Statistical hypothesis testing ,Confidence region ,Propagation of uncertainty ,QB275-343 ,Applied Mathematics ,Astronomy and Astrophysics ,06 humanities and the arts ,confidence region ,Bayesian statistics ,random variable ,Geophysics ,univariate and multivariate distributions ,060302 philosophy ,error propagation ,hypothesis test ,Random variable ,Geodesy

- Abstract

The Bayesian approach allows an intuitive way to derive the methods of statistics. Probability is defined as a measure of the plausibility of statements or propositions. Three rules are sufficient to obtain the laws of probability. If the statements refer to the numerical values of variables, the so-called random variables, univariate and multivariate distributions follow. They lead to the point estimation by which unknown quantities, i.e. unknown parameters, are computed from measurements. The unknown parameters are random variables, whereas they are fixed quantities in traditional statistics, which is not founded on Bayes’ theorem. Bayesian statistics therefore recommends itself for Monte Carlo methods, which generate random variates from given distributions. Monte Carlo methods, of course, can also be applied in traditional statistics. The unknown parameters are introduced as functions of the measurements, and the Monte Carlo methods give the covariance matrix and the expectation of these functions. A confidence region is derived where the unknown parameters are situated with a given probability. Following a method of traditional statistics, hypotheses are tested by determining whether a value for an unknown parameter lies inside or outside the confidence region. The error propagation of a random vector by the Monte Carlo methods is presented as an application. If the random vector results from a nonlinearly transformed vector, its covariance matrix and its expectation follow from the Monte Carlo estimate. This saves computing a considerable number of derivatives, and errors of the linearization are avoided. The Monte Carlo method is therefore efficient. If the functions of the measurements are given by a sum of two or more random vectors with different multivariate distributions, the resulting distribution is generally not known. The Monte Carlo methods are then needed to obtain the covariance matrix and the expectation of the sum.

- Published

- 2018
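The Monte Carlo error propagation summarized in the abstract of entry 7 can be illustrated with a minimal sketch. It is not taken from the publication: the function names, the polar-to-rectangular transformation, the independent normal inputs, and all numerical values are assumptions chosen for illustration.

```python
import math
import random


def mc_propagate(f, mean, std, n_samples=20000, seed=0):
    """Monte Carlo estimate of the expectation and covariance matrix of y = f(x).

    Assumption of this sketch: the components of x are independent and
    normally distributed with the given means and standard deviations.
    """
    rng = random.Random(seed)
    samples = [f([rng.gauss(m, s) for m, s in zip(mean, std)])
               for _ in range(n_samples)]
    dim = len(samples[0])
    # Expectation: component-wise sample mean of the transformed variates.
    exp = [sum(y[i] for y in samples) / n_samples for i in range(dim)]
    # Covariance matrix: unbiased sample covariance, no derivatives of f needed.
    cov = [[sum((y[i] - exp[i]) * (y[j] - exp[j]) for y in samples)
            / (n_samples - 1) for j in range(dim)] for i in range(dim)]
    return exp, cov


def polar_to_xy(p):
    """Nonlinear transformation (hypothetical example): distance and angle to x, y."""
    r, phi = p
    return [r * math.cos(phi), r * math.sin(phi)]


expectation, covariance = mc_propagate(
    polar_to_xy, mean=[10.0, math.pi / 4], std=[0.01, 0.001])
print(expectation)
print(covariance)
```

Because the transformed samples are used directly, no Jacobian of `polar_to_xy` has to be derived, which is the saving the abstract refers to.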

8. A 1.544-Mb/s CMOS line driver for a 22.8-Ω load.

- Author

-

H. Herrmann and Rudolf Koch

- Published

- 1990

- Full Text

- View/download PDF

9. Survival strategies for mixed-signal systems-on-chip (panel session).

- Author

-

Stephan Ohr, Rob A. Rutenbar, Henry Chang, Georges G. E. Gielen, Rudolf Koch, Roy McGuffin, and K. C. Murphy

- Published

- 2000

- Full Text

- View/download PDF

10. Monte Carlo Methods

- Author

-

Karl-Rudolf Koch

- Subjects

010504 meteorology & atmospheric sciences ,010502 geochemistry & geophysics ,01 natural sciences ,0105 earth and related environmental sciences

- Published

- 2020

- Full Text

- View/download PDF

11. Handbook of mathematical geodesy-functional analytic and potential theoretic methods

- Author

-

Karl-Rudolf Koch

- Subjects

Management science ,Computer science ,Modeling and Simulation ,General Earth and Planetary Sciences ,Computational Science and Engineering

- Published

- 2019

- Full Text

- View/download PDF

12. Expectation Maximization algorithm and its minimal detectable outliers

- Author

-

Karl-Rudolf Koch

- Subjects

010504 meteorology & atmospheric sciences ,Monte Carlo method ,T distribution ,010502 geochemistry & geophysics ,01 natural sciences ,Geophysics ,Geochemistry and Petrology ,Outlier ,Expectation–maximization algorithm ,Statistics ,Mean-shift ,0105 earth and related environmental sciences ,Mathematics ,Statistical hypothesis testing

- Abstract

Minimal Detectable Biases (MDBs) or Minimal Detectable Outliers for the Expectation Maximization (EM) algorithm based on the variance-inflation and the mean-shift model are determined for an example. A Monte Carlo method is applied with no outlier and with one, two and three randomly chosen outliers. The outliers introduced are recovered and the corresponding MDBs are almost independent from the number of outliers. The results are compared to the MDB derived earlier by the author. This MDB approximately agrees with the MDB for one outlier of the EM algorithm. The MDBs for two and three outliers are considerably larger than MDBs of the EM algorithm.

- Published

- 2016

- Full Text

- View/download PDF

13. Artificial intelligence for determining systematic effects of laser scanners

- Author

-

Jan Martin Brockmann and Karl-Rudolf Koch

- Subjects

Series (mathematics) ,Laser scanning ,business.industry ,Ergodicity ,Coordinate system ,010103 numerical & computational mathematics ,010502 geochemistry & geophysics ,01 natural sciences ,Standard deviation ,Loop (topology) ,Modeling and Simulation ,General Earth and Planetary Sciences ,Artificial intelligence ,0101 mathematics ,business ,Inner loop ,Realization (probability) ,0105 earth and related environmental sciences ,Mathematics

- Abstract

Artificial intelligence is interpreted by a machine learning algorithm. Its realization is applied for a two-dimensional grid of points and depends on six parameters which determine the limits of loops. The outer loop defines the width of the grid, the innermost loop the number of scans, which result from the three-dimensional coordinate system for the \(x_i\)-, \(y_i\)-, \(z_i\)-coordinates of a laser scanner. The \(y_i\)-coordinates approximate the distances measured by the laser scanner. The minimal standard deviations of the measurements distorted by systematic effects for the \(y_i\)-coordinates are computed by the Monte Carlo estimate of Sect. 6. The minimum of these minimal standard deviations is found in the grid of points by the machine learning algorithm and used to judge the outcome. Two results are given in Sect. 7. They differ by the widths of the grid and show that only for precise applications the systematic effects of the laser scanner have to be taken care of. Instead of assuming a standard deviation for the systematic effects from prior information as mentioned in Sect. 1, the \(x_i\)-, \(y_i\)-, \(z_i\)-coordinates are repeatedly measured by the laser scanner. However, there are too few repetitions to fulfill the conditions of the multivariate model of Sect. 2 for all measured coordinates. The variances of the measurements plus systematic effects computed by the Monte Carlo estimate of Sect. 6 can therefore be obtained for a restricted number of points only. This number is computed by random variates. For two numbers, the variations of the standard deviations of the \(y_i\)-coordinates, the variations of the standard deviations of the \(x_i\)-, \(y_i\)-, \(z_i\)-coordinates from the multivariate model, the variations of the standard deviations of the systematic effects and the variations of the confidence intervals are presented.
The repeated measurements define time series whose auto- and cross-correlation functions are applied as correlations for the systematic effects of the measurements. The ergodicity of the time series is shown.

- Published

- 2019

- Full Text

- View/download PDF

14. Minimal detectable outliers as measures of reliability

- Author

-

Karl-Rudolf Koch

- Subjects

Grubbs' test for outliers ,Geophysics ,Geochemistry and Petrology ,Estimation theory ,Statistics ,Outlier ,Linear model ,Geodetic datum ,Function (mathematics) ,Computers in Earth Sciences ,Reliability (statistics) ,Statistical hypothesis testing ,Mathematics

- Abstract

The concept of reliability was introduced into geodesy by Baarda (A testing procedure for use in geodetic networks. Publications on Geodesy, vol. 2. Netherlands Geodetic Commission, Delft, 1968). It gives a measure for the ability of a parameter estimation to detect outliers and leads in case of one outlier to the MDB, the minimal detectable bias or outlier. The MDB depends on the non-centrality parameter of the \(\chi ^2\)-distribution, as the variance factor of the linear model is assumed to be known, on the size of the outlier test of an individual observation which is set to 0.001 and on the power of the test which is generally chosen to be 0.80. Starting from an estimated variance factor, the \(F\)-distribution is applied here. Furthermore, the size of the test of the individual observation is a function of the number of outliers to keep the size of the test of all observations constant, say 0.05. The power of the test is set to 0.80. The MDBs for multiple outliers are derived here under these assumptions. The method is applied to the reconstruction of a bell-shaped surface measured by a laser scanner. The MDBs are introduced as outliers for the alternative hypotheses of the outlier tests. A Monte Carlo method reveals that due to the way of introducing the outliers, the false null hypotheses cannot be rejected on the average with a power of 0.80 if the MDBs are not enlarged by a factor.

- Published

- 2015

- Full Text

- View/download PDF
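For the classical case described at the start of the abstract of entry 14 (known variance factor, one outlier, test size 0.001, power 0.80), the non-centrality parameter can be computed with a short sketch. The function names are hypothetical, the far tail of the two-sided test is neglected, and the MDB formula used is the textbook expression for uncorrelated observations with redundancy number `r`, which is an assumption and not quoted from the paper. The multiple-outlier case with the \(F\)-distribution treated in the paper is not covered here.

```python
from statistics import NormalDist


def noncentrality(alpha, power):
    """Non-centrality parameter of the chi-square test with one degree of freedom.

    Approximation: the contribution of the far tail of the two-sided test
    is neglected, so sqrt(lambda) = z_(1 - alpha/2) + z_(power).
    """
    z = NormalDist()
    delta = z.inv_cdf(1.0 - alpha / 2.0) + z.inv_cdf(power)
    return delta * delta


def minimal_detectable_bias(sigma, redundancy, lam):
    """Textbook MDB for one uncorrelated observation (assumed formula)."""
    return sigma * (lam / redundancy) ** 0.5


lam = noncentrality(alpha=0.001, power=0.80)
mdb = minimal_detectable_bias(sigma=1.0, redundancy=0.5, lam=lam)
print(lam, mdb)
```

With these settings `lam` comes out close to 17.07. The values `sigma=1.0` and `redundancy=0.5` are placeholders.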

15. Robust estimations for the nonlinear Gauss Helmert model by the expectation maximization algorithm

- Author

-

Karl-Rudolf Koch

- Subjects

Polynomial ,Mathematical optimization ,Gauss ,Linear model ,Mixture model ,Nonlinear system ,Geophysics ,Geochemistry and Petrology ,Linearization ,Homoscedasticity ,Expectation–maximization algorithm ,Applied mathematics ,Computers in Earth Sciences ,Mathematics

- Abstract

For deriving the robust estimation by the EM (expectation maximization) algorithm for a model, which is more general than the linear model, the nonlinear Gauss Helmert (GH) model is chosen. It contains the errors-in-variables model as a special case. The nonlinear GH model is difficult to handle because of the linearization and the Gauss Newton iterations. Approximate values for the observations have to be introduced for the linearization. Robust estimates by the EM algorithm based on the variance-inflation model and the mean-shift model have been derived for the linear model in case of homoscedasticity. To derive these two EM algorithms for the GH model, different variances are introduced for the observations and the expectations of the measurements defined by the linear model are replaced by the ones of the GH model. The two robust methods are applied to fit by the GH model a polynomial surface of second degree to the measured three-dimensional coordinates of a laser scanner. This results in detecting more outliers than by the linear model.

- Published

- 2013

- Full Text

- View/download PDF

16. Robust estimation by expectation maximization algorithm

- Author

-

Karl-Rudolf Koch

- Subjects

Linear model ,Expected value ,Mixture model ,Normal distribution ,Geophysics ,Geochemistry and Petrology ,Statistics ,Expectation–maximization algorithm ,Outlier ,Computers in Earth Sciences ,Likelihood function ,Algorithm ,Scale parameter ,Mathematics

- Abstract

A mixture of normal distributions is assumed for the observations of a linear model. The first component of the mixture represents the measurements without gross errors, while each of the remaining components gives the distribution for an outlier. Missing data are introduced to deliver the information as to which observation belongs to which component. The unknown location parameters and the unknown scale parameter of the linear model are estimated by the EM algorithm, which is iteratively applied. The E (expectation) step of the algorithm determines the expected value of the likelihood function given the observations and the current estimate of the unknown parameters, while the M (maximization) step computes new estimates by maximizing the expectation of the likelihood function. In comparison to Huber’s M-estimation, the EM algorithm does not only identify outliers by introducing small weights for large residuals but also estimates the outliers. They can be corrected by the parameters of the linear model freed from the distortions by gross errors. Monte Carlo methods with random variates from the normal distribution then give expectations, variances, covariances and confidence regions for functions of the parameters estimated by taking care of the outliers. The method is demonstrated by the analysis of measurements with gross errors of a laser scanner.

- Published

- 2012

- Full Text

- View/download PDF
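The E and M steps described in the abstract of entry 16 can be sketched for a much simpler setting than the paper's. The sketch below is an assumption-laden illustration and not the author's algorithm: it estimates only a single location parameter, uses a two-component variance-inflation mixture instead of one component per outlier, and keeps the scale `sigma`, the outlier fraction `eps`, and the inflation factor `k` fixed. All names and data are hypothetical.

```python
import math


def normal_pdf(x, mu, var):
    """Density of the normal distribution N(mu, var)."""
    return math.exp(-0.5 * (x - mu) ** 2 / var) / math.sqrt(2.0 * math.pi * var)


def em_variance_inflation(y, sigma=1.0, eps=0.05, k=100.0, n_iter=50):
    """EM estimate of a location parameter under a variance-inflation mixture.

    Model (assumed): y_i ~ (1 - eps) N(mu, sigma^2) + eps N(mu, k sigma^2).
    Returns the estimate of mu and the posterior outlier probabilities.
    """
    mu = sorted(y)[len(y) // 2]  # start from a middle order statistic
    post = [0.0] * len(y)
    for _ in range(n_iter):
        # E step: posterior probability that each observation is an outlier.
        post = []
        for yi in y:
            good = (1.0 - eps) * normal_pdf(yi, mu, sigma ** 2)
            bad = eps * normal_pdf(yi, mu, k * sigma ** 2)
            post.append(bad / (good + bad))
        # M step: weighted mean; suspected outliers are down-weighted by 1/k.
        weights = [(1.0 - p) + p / k for p in post]
        mu = sum(w * yi for w, yi in zip(weights, y)) / sum(weights)
    return mu, post


y = [9.8, 10.1, 10.0, 9.9, 10.2, 10.05, 9.95, 25.0]  # last value is a gross error
mu, post = em_variance_inflation(y)
print(mu, post)
```

The posterior probabilities in `post` identify the suspect observation, while the weighted mean shows how its influence on the estimate is reduced rather than removed.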

17. Optimal regularization for geopotential model GOCO02S by Monte Carlo methods and multi-scale representation of density anomalies

- Author

-

Jan Martin Brockmann, Wolf-Dieter Schuh, and Karl-Rudolf Koch

- Subjects

Geopotential ,Monte Carlo method ,Spherical harmonics ,Contrast (statistics) ,Harmonic (mathematics) ,Geometry ,Regularization (mathematics) ,Geophysics ,Wavelet ,Geochemistry and Petrology ,Applied mathematics ,Geopotential model ,Computers in Earth Sciences ,Mathematics

- Abstract

GOCO02S is a combined satellite-only geopotential model, regularized from degrees 180 to 250 of the expansion into spherical harmonics. To investigate the start of the regularization, the normal equations of GOCO02S have been used to compute additional geopotential models by regularizations beginning at degrees 160, 200, 220 and with no regularization. Three different methods are applied to determine where to start the regularization. The simplest one considers the decrease of the degree variances of the not regularized solution. The second one tests for the same solution the hypothesis that the square root of the degree variance is equal to the value computed by the estimated harmonic coefficients. If the hypothesis has to be rejected for a certain degree, the error degree variance is so large that the estimated harmonic coefficients cannot be trusted anymore so that the regularization has to start at that degree. The third method uses the density anomalies by which the disturbing potential is caused resulting from the geopotential model. The density anomalies are well suited to visualize the effects of the higher degree harmonics. In contrast to the base functions of the harmonic coefficients with global support, the density anomalies are expressed by a B-spline surface with local support. Multi-scale representations were applied and the hypotheses tested that the wavelet coefficients are equal to zero. Accepting the hypotheses means that nonsignificant wavelet coefficients were determined which lead to nonsignificant density anomalies. By comparing these anomalies for different regularizations, the degree where to start the regularization is determined. It turns out that beginning the regularization at degree 180, as was done for GOCO02S, is a correct choice.

- Published

- 2012

- Full Text

- View/download PDF

18. Digital Images with 3D Geometry from Data Compression by Multi-scale Representations of B-Spline Surfaces

- Author

-

Karl-Rudolf Koch

- Subjects

Laser scanning ,Scale (ratio) ,Computer science ,Applied Mathematics ,B-spline ,Astronomy and Astrophysics ,Geometry ,3d model ,Digital image ,Geophysics ,Computer graphics (images) ,Earth and Planetary Sciences (miscellaneous) ,Digital geometry ,3d geometry ,Computers in Earth Sciences ,Data compression

- Abstract

To build up a 3D (three-dimensional) model of the surface of an object, the heights of points on the surface are measured, for instance, by a laser scanner. The intensities of the reflected laser beam of the points can be used to visualize the 3D model as range image. It is proposed here to fit a two-dimensional B-spline surface to the measured heights and intensities by the lofting method. To fully use the geometric information of the laser scanning, points on the fitted surface with their intensities are computed with a density higher than that of the measurements. This gives a 3D model of high resolution which is visualized by the intensities of the points on the B-spline surface. For a realistic view of the 3D model, the coordinates of a digital photo of the object are transformed to the coordinate system of the 3D model so that the points get the colors of the digital image. To efficiently compute and store the 3D model, data compression is applied. It is derived from the multi-scale representation of the dense grid of points on the B-spline surface. The proposed method is demonstrated for an example.

- Published

- 2011

- Full Text

- View/download PDF

19. N-dimensional B-spline surface estimated by lofting for locally improving IRI

- Author

-

Michael Schmidt and Karl-Rudolf Koch

- Subjects

Surface (mathematics) ,Geophysics ,N dimensional ,Applied Mathematics ,B-spline ,Earth and Planetary Sciences (miscellaneous) ,Astronomy and Astrophysics ,Geometry ,Computers in Earth Sciences ,Geology ,Lofting

- Abstract

N-dimensional surfaces are defined by the tensor product of B-spline basis functions. To estimate the unknown control points of these B-spline surfaces, the lofting method, also called the skinning method, is applied by cross-sectional curve fits. It is shown by an analytical proof and numerically confirmed by the example of a four-dimensional surface that the results of the lofting method agree with the ones of the simultaneous estimation of the unknown control points. The numerical complexity for estimating \(v^n\) control points by the lofting method is \(O(v^{n+1})\) while it results in \(O(v^{3n})\) for the simultaneous estimation. It is also shown that a B-spline surface estimated by a simultaneous estimation can be extended to higher dimensions by the lofting method, thus saving computer time. An application of this method is the local improvement of the International Reference Ionosphere (IRI), e.g. by the slant total electron content (STEC) obtained by dual-frequency observations of the Global Navigation Satellite System (GNSS). Three-dimensional B-spline surfaces at different time epochs have to be determined by the simultaneous estimation of the control points for this improvement. A four-dimensional representation in space and time of the electron density of the ionosphere is desirable. It can be obtained by the lofting method. This takes less computer time than determining the four-dimensional surface solely by a simultaneous estimation.

- Published

- 2011

- Full Text

- View/download PDF
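To make the complexity statement in the abstract of entry 19 concrete, the following worked example compares the two orders of magnitude. The values \(v = 10\) control points per dimension and \(n = 4\) dimensions are assumptions chosen only for illustration, and constant factors hidden in the \(O\)-notation are ignored.

```latex
\[
\frac{v^{3n}}{v^{n+1}} = v^{2n-1},
\qquad
v = 10,\; n = 4:\quad
v^{n+1} = 10^{5},\quad
v^{3n} = 10^{12},\quad
v^{2n-1} = 10^{7}.
\]
```

Under these assumptions the simultaneous estimation would need on the order of ten million times more operations than lofting for the same \(v^n = 10^4\) control points.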

20. Data compression by multi-scale representation of signals

- Author

-

Karl-Rudolf Koch

- Subjects

Scale (ratio) ,business.industry ,Computer science ,Modeling and Simulation ,Earth and Planetary Sciences (miscellaneous) ,Representation (systemics) ,Pattern recognition ,Pyramid (image processing) ,Artificial intelligence ,business ,Engineering (miscellaneous) ,Data compression ,Image compression

- Published

- 2011

- Full Text

- View/download PDF

21. The dynamic locking screw (DLS) can increase interfragmentary motion on the near cortex of locked plating constructs by reducing the axial stiffness

- Author

-

Martin Lucke, Andreas K. Nussler, Ulrich Stöckle, Carsten Horn, Daniel Andermatt, Andreas Lenich, Rudolf Koch, Stefan Eichhorn, Stefan Döbele, Rainer Burgkart, and Arne Buchholtz

- Subjects

Fracture Healing ,medicine.medical_specialty ,business.industry ,Bone Screws ,Stiffness ,Motion (geometry) ,Locked plating ,Bending ,Models, Biological ,Biomechanical Phenomena ,Surgery ,Tibial Fractures ,Fracture Fixation, Internal ,Fractures, Bone ,Plate osteosynthesis ,Bending stiffness ,Bone plate ,Fracture fixation ,medicine ,Humans ,medicine.symptom ,Composite material ,business ,Bone Plates

- Abstract

The plate-screw interface of an angular stable plate osteosynthesis is very rigid. So far, all attempts to decrease the stiffness of locked plating constructs, e.g. the bridged plate technique, primarily decrease the bending stiffness. Thus, the interfragmentary motion increases only on the far cortical side by bending the plate. To solve this problem, the dynamic locking screw (DLS) was developed. Comparison tests were performed with locking screws (LS) and DLS. Axial stiffness, bending stiffness and interfragmentary motion were compared. For measurements, we used a simplified transverse fracture model, consisting of POM C and an 11-hole LCP3.5 with a fracture gap of 3 mm. Three-dimensional fracture motion was detected using an optical measurement device (PONTOS 5 M/GOM) consisting of two CCD cameras (2,448 × 2,048 pixels) observing passive markers. The DLS reduced the axial stiffness by approximately 16% while significantly increasing the interfragmentary motion at the near cortical side from 282 µm (LS) to 423 µm (DLS) under an axial load of 150 N. The use of DLS reduces the stiffness of the plate-screw interface and thus increases the interfragmentary motion at the near cortical side without altering the advantages of angular stability and the strength.

- Published

- 2010

- Full Text

- View/download PDF

22. Approximating covariance matrices estimated in multivariate models by estimated auto- and cross-covariances

- Author

-

Heiner Kuhlmann, Wolf-Dieter Schuh, and Karl-Rudolf Koch

- Subjects

Covariance function ,Covariance matrix ,Covariance ,Estimation of covariance matrices ,Geophysics ,Matérn covariance function ,Geochemistry and Petrology ,Scatter matrix ,Statistics ,Law of total covariance ,Statistics::Methodology ,Applied mathematics ,Rational quadratic covariance function ,Computers in Earth Sciences ,Mathematics

- Abstract

Quantities like tropospheric zenith delays or station coordinates are repeatedly measured at permanent VLBI or GPS stations so that time series for the quantities at each station are obtained. The covariances of these quantities can be estimated in a multivariate linear model. The covariances are needed for computing uncertainties of results derived from these quantities. The covariance matrix for many permanent stations becomes large, so the need for simplifying it may arise under the condition that the uncertainties of derived results still agree. This is accomplished by assuming that the different time series of a quantity like the station height for each permanent station can be combined to obtain one time series. The covariance matrix then follows from the estimates of the auto- and cross-covariance functions of the combined time series. A further approximation is found if compactly supported covariance functions are fitted to an estimated autocovariance function in order to obtain a covariance matrix which is representative of different kinds of measurements. The simplification of a covariance matrix estimated in a multivariate model is investigated here for the coordinates of points of a grid measured repeatedly by a laser scanner. The approximations are checked by determining the uncertainty of the sum of distances to the points of the grid. To obtain a realistic value for this uncertainty, the covariances of the measured coordinates have to be considered. Three different setups of measurements are analyzed and a covariance matrix is found which is representative for all three setups. Covariance matrices for the measurements of laser scanners can therefore be determined in advance without estimating them for each application.

- Published

- 2010

- Full Text

- View/download PDF
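The step in the abstract of entry 22 where a covariance matrix follows from an estimated autocovariance function can be sketched as follows. This is an illustration under assumptions not stated in the source: a single stationary, equally spaced time series, the biased autocovariance estimator, and a Toeplitz-structured matrix. Cross-covariances and compactly supported covariance functions are not covered, and the function names and data are hypothetical.

```python
def autocovariance(series, max_lag):
    """Biased sample autocovariance c(k) for lags k = 0..max_lag."""
    n = len(series)
    mean = sum(series) / n
    centered = [x - mean for x in series]
    return [sum(centered[t] * centered[t + k] for t in range(n - k)) / n
            for k in range(max_lag + 1)]


def toeplitz_covariance(acov):
    """Covariance matrix with entries c(|i - j|), assuming stationarity."""
    m = len(acov)
    return [[acov[abs(i - j)] for j in range(m)] for i in range(m)]


series = [1.0, 2.0, 3.0, 2.0, 1.0, 2.0, 3.0, 2.0]  # made-up repeated measurements
acov = autocovariance(series, max_lag=2)
cov = toeplitz_covariance(acov)
print(acov)
print(cov)
```

Only `max_lag + 1` numbers are stored instead of a full matrix, which reflects the kind of simplification the abstract is aiming at.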

23. Identity of simultaneous estimates of control points and of their estimates by the lofting method for NURBS surface fitting

- Author

-

Karl-Rudolf Koch

- Subjects

Reverse engineering ,Surface (mathematics) ,Computational complexity theory ,Mechanical Engineering ,Geometry ,computer.software_genre ,Industrial and Manufacturing Engineering ,Identity (music) ,Square (algebra) ,Computer Science Applications ,Skinning ,Control and Systems Engineering ,Applied mathematics ,Special case ,computer ,Software ,Lofting ,Mathematics

- Abstract

For reverse engineering, nonuniform rational B-spline surfaces are fitted to measured coordinates of points. Two methods are often applied. The first one consists of simultaneously estimating the unknown control points of the surface. The second method is a special case of the lofting or skinning method where cross-sectional curves are not interpolated but fitted to the measurements, thus obtaining the control points. This approach is considered to give an approximate solution of the first one. However, it is shown here by an analytical proof and confirmed by a numerical example that both methods give identical results. Since the computational complexity of the first approach is the square of the second one, the simultaneous estimation of a large number of control points should be avoided.

- Published

- 2009

- Full Text

- View/download PDF

24. Systematic Effects in Laser Scanning and Visualization by Confidence Regions

- Author

-

Jan Martin Brockmann and Karl-Rudolf Koch

- Subjects

010504 meteorology & atmospheric sciences ,Laser scanning ,Computer science ,business.industry ,Monte Carlo method ,Probability density function ,01 natural sciences ,Visualization ,010309 optics ,Optics ,Modeling and Simulation ,0103 physical sciences ,Earth and Planetary Sciences (miscellaneous) ,Computer vision ,Artificial intelligence ,business ,Engineering (miscellaneous) ,0105 earth and related environmental sciences ,Confidence region

- Abstract

A new method for dealing with systematic effects in laser scanning and visualizing them by confidence regions is derived. The standard deviations of the systematic effects are obtained by repeatedly measuring three-dimensional coordinates by the laser scanner. In addition, autocovariance and cross-covariance functions are computed by the repeated measurements and give the correlations of the systematic effects. The normal distribution for the measurements and the multivariate uniform distribution for the systematic effects are applied to generate random variates for the measurements and random variates for the measurements plus systematic effects. Monte Carlo estimates of the expectations and the covariance matrix of the measurements with systematic effects are computed. The densities for the confidence ellipsoid for the measurements and the confidence region for the measurements with systematic effects are obtained by relative frequencies. They only depend on the size of the rectangular volume elements for which the densities are determined. The problem of sorting the densities is solved by sorting distances together with the densities. This allows a visualization of the confidence ellipsoid for the measurements and the confidence region for the measurements with systematic effects.

- Published

- 2016

- Full Text

- View/download PDF

25. Gibbs sampler by sampling-importance-resampling

- Author

-

Karl-Rudolf Koch

- Subjects

Sampling (statistics) ,Probability density function ,Markov chain Monte Carlo ,02 engineering and technology ,Conditional probability distribution ,01 natural sciences ,Statistics::Computation ,010104 statistics & probability ,symbols.namesake ,Geophysics ,Geochemistry and Petrology ,Resampling ,0202 electrical engineering, electronic engineering, information engineering ,symbols ,020201 artificial intelligence & image processing ,Statistical physics ,0101 mathematics ,Computers in Earth Sciences ,Importance sampling ,Smoothing ,Gibbs sampling ,Mathematics

- Abstract

Among the Markov chain Monte Carlo methods, the Gibbs sampler has the advantage that it samples from the conditional distributions for each unknown parameter, thus decomposing the sample space. In the case the conditional distributions are not tractable, the Gibbs sampler by means of sampling-importance-resampling is presented here. It uses the prior density function of a Bayesian analysis as the importance sampling distribution. This leads to a fast convergence of the Gibbs sampler as demonstrated by the smoothing with preserving the edges of 3D images of emission tomography.

- Published

- 2007

- Full Text

- View/download PDF
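The sampling-importance-resampling (SIR) step mentioned in the abstract of entry 25 can be sketched in isolation. The sketch assumes, as the abstract states, that the prior density serves as the importance sampling distribution, so the importance weights are proportional to the likelihood. Everything else is hypothetical: the one-dimensional normal prior and likelihood, the function names, and the sample sizes. In a Gibbs sampler a step of this kind would be applied in turn to the conditional distribution of each unknown parameter, which is not shown here.

```python
import math
import random


def sir_draws(prior_sampler, likelihood, n_candidates, n_draws, rng):
    """Sampling-importance-resampling with the prior as importance distribution.

    Candidates are drawn from the prior, weighted by the likelihood, and
    resampled with replacement in proportion to those weights.
    """
    candidates = [prior_sampler(rng) for _ in range(n_candidates)]
    weights = [likelihood(c) for c in candidates]
    return rng.choices(candidates, weights=weights, k=n_draws)


def prior_sampler(rng):
    """Hypothetical prior: N(0, 2^2)."""
    return rng.gauss(0.0, 2.0)


def likelihood(theta, y=1.0, sigma=0.5):
    """Hypothetical likelihood of one observation y ~ N(theta, sigma^2)."""
    return math.exp(-0.5 * ((y - theta) / sigma) ** 2)


rng = random.Random(1)
draws = sir_draws(prior_sampler, likelihood, n_candidates=5000, n_draws=2000, rng=rng)
posterior_mean = sum(draws) / len(draws)
print(posterior_mean)
```

For this conjugate toy case the exact posterior mean is 4 / 4.25, roughly 0.94, which gives a simple check on the resampled draws.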

26. Parameter Estimation and Hypothesis Testing in Linear Models

- Author

-

Karl-Rudolf Koch

- Subjects

- Parameter estimation, Statistical hypothesis testing, Linear models (Statistics)

- Abstract

The necessity to publish the second edition of this book arose when its third German edition had just been published. This second English edition is therefore a translation of the third German edition of Parameter Estimation and Hypothesis Testing in Linear Models, published in 1997. It differs from the first English edition by the addition of a new chapter on robust estimation of parameters and the deletion of the section on discriminant analysis, which has been more completely dealt with by the author in the book Bayesian Inference with Geodetic Applications, Springer-Verlag, Berlin Heidelberg New York, 1990. Smaller additions and deletions have been incorporated, to improve the text, to point out new developments or to eliminate errors which became apparent. A few examples have been also added. I thank Springer-Verlag for publishing this second edition and for the assistance in checking the translation, although the responsibility of errors remains with the author. I also want to express my thanks to Mrs. Ingrid Wahl and to Mrs. Heidemarlen Westhäuser who prepared the second edition.

Bonn, January 1999, Karl-Rudolf Koch

Preface to the First Edition: This book is a translation with slight modifications and additions of the second German edition of Parameter Estimation and Hypothesis Testing in Linear Models, published in 1987.

- Published

- 2013

27. Einführung in die Bayes-Statistik

- Author

-

Karl-Rudolf Koch

- Subjects

- Environmental sciences, Physics, Computer vision, Geography, Geophysics, Statistics, Geographic information systems

- Abstract

The book introduces Bayesian statistics in a simple and comprehensible way. Starting from Bayes' theorem, the estimation of unknown parameters, the determination of confidence regions for the unknown parameters, and the testing of hypotheses for the parameters are derived. The methods are applied to parameter estimation in the linear model, to parameter estimation that is robust against outliers in the observations, to prediction and filtering, to the estimation of variance and covariance components, and to pattern recognition. Bayesian networks serve for decisions in systems with uncertainties. If required integrals cannot be solved analytically, numerical methods based on random values are used.

- Published

- 2013

28. Gibbs sampler for computing and propagating large covariance matrices

- Author

-

Karl-Rudolf Koch, Brigitte Gundlich, and Jürgen Kusche

- Subjects

Estimation of covariance matrices ,Geophysics ,Covariance function ,Covariance mapping ,Geochemistry and Petrology ,Monte Carlo method ,Rational quadratic covariance function ,Monte Carlo integration ,Covariance intersection ,Statistical physics ,Computers in Earth Sciences ,Covariance ,Mathematics

- Abstract

The use of sampling-based Monte Carlo methods for the computation and propagation of large covariance matrices in geodetic applications is investigated. In particular, the so-called Gibbs sampler, and its use in deriving covariance matrices by Monte Carlo integration, and in linear and nonlinear error propagation studies, is discussed. Modifications of this technique are given which improve in efficiency in situations where estimated parameters are highly correlated and normal matrices appear as ill-conditioned. This is a situation frequently encountered in satellite gravity field modelling. A synthetic experiment, where covariance matrices for spherical harmonic coefficients are estimated and propagated to geoid height covariance matrices, is described. In this case, the generated samples correspond to random realizations of errors of a gravity field model.

- Published

- 2003

- Full Text

- View/download PDF

29. Outlier detection by the EM algorithm for laser scanning in rectangular and polar coordinate systems

- Author

-

Boris Kargoll and Karl-Rudolf Koch

- Subjects

Mathematical optimization ,Laser scanning ,Modeling and Simulation ,Monte Carlo method ,Expectation–maximization algorithm ,Earth and Planetary Sciences (miscellaneous) ,Anomaly detection ,Mean-shift ,Polar coordinate system ,Engineering (miscellaneous) ,Algorithm ,Mathematics

- Abstract

To visualize the surface of an object, laser scanners determine the rectangular coordinates of points of a grid on the surface of the object in a local coordinate system. Vertical angles, horizontal angles and distances of a polar coordinate system are measured with the scanning. Outliers generally occur as gross errors in the distances. It is therefore investigated here whether rectangular or polar coordinates are better suited for the detection of outliers. The parameters of a surface represented by a polynomial are estimated in the nonlinear Gauss Helmert (GH) model and in a linear model. Rectangular and polar coordinates are used, and it is shown that the results for both coordinate systems are identical. It turns out that the linear model is sufficient to estimate the parameters of the polynomial surface. Outliers are therefore identified in the linear model by the expectation maximization (EM) algorithm for the variance-inflation model and are confirmed by the EM algorithm for the mean-shift model. Again, rectangular and polar coordinates are used. The same outliers are identified in both coordinate systems.

- Published

- 2015

- Full Text

- View/download PDF

30. Taxonomic revision of genus Homodiaetus (Teleostei, Siluriformes, Trichomycteridae)

- Author

-

Walter Rudolf Koch

- Subjects

Homodiaetus anisitsi ,Stegophilinae ,Odontode ,Trichomycteridae ,Anatomy ,Biology ,biology.organism_classification ,Neotropical ,lcsh:Zoology ,Animal Science and Zoology ,Taxonomy (biology) ,lcsh:QL1-991 ,Homodiaetus ,Snout ,Taxonomy

- Abstract

The genus Homodiaetus Eigenmann & Ward, 1907 is revised and four species are recognized. Its distribution is restricted to southeastern South America, from Uruguay to Paraguay river at west to the coastal drainages of Rio de Janeiro State, Brazil. Homodiaetus is currently distinguished from other genera of Stegophilinae by the combination of the following characters: origin of ventral-fin at midlength between the snout tip and the caudal-fin origin; opercle with three or more odontodes; and gill membranes confluent with the isthmus. Homodiaetus anisitsi Eigenmann & Ward, 1907, is diagnosed by the caudal-fin with black middle rays, margin of upper and lower procurrent caudal-fin rays with dark stripes extending to the caudal-fin, and 3-6 opercular odontodes; H. passarellii (Ribeiro, 1944) with 6-7 opercular odontodes, 21-24 lower procurrent caudal-fin rays and 23-26 upper procurrent caudal-fin rays; H. banguela sp. nov. with 9 opercular odontodes, 17-19 lower procurrent caudal-fin rays, 17-22 upper procurrent caudal-fin rays, reduction of fourth pharyngobranchial with only three teeth and untoothed fifth ceratobranchial; and H. graciosa sp. nov. with 5-6 dentary rows, 7-9 opercular odontodes and 16-23 upper procurrent caudal-fin rays.

- Published

- 2002

31. Confidence regions for GPS baselines by Bayesian statistics

- Author

-

Karl-Rudolf Koch and Brigitte Gundlich

- Subjects

business.industry ,Computer science ,Monte Carlo method ,Geodetic datum ,Confidence interval ,Numerical integration ,Bayesian statistics ,Geophysics ,Integer ,Geochemistry and Petrology ,Statistics ,Global Positioning System ,Computers in Earth Sciences ,business ,Algorithm ,Confidence region

- Abstract

The global positioning system (GPS) model is distinctive in the way that the unknown parameters are not only real-valued, the baseline coordinates, but also integers, the phase ambiguities. The GPS model therefore leads to a mixed integer–real-valued estimation problem. Common solutions are the float solution, which ignores the ambiguities being integers, or the fixed solution, where the ambiguities are estimated as integers and then are fixed. Confidence regions, so-called HPD (highest posterior density) regions, for the GPS baselines are derived by Bayesian statistics. They take care of the integer character of the phase ambiguities but still consider them as unknown parameters. Estimating these confidence regions leads to a numerical integration problem which is solved by Monte Carlo methods. This is computationally expensive so that approximations of the confidence regions are also developed. In an example it is shown that for a high confidence level the confidence region consists of more than one region.

- Published

- 2002

- Full Text

- View/download PDF

32. [Untitled]

- Author

-

Karl-Rudolf Koch and Robert Blinken

- Subjects

Surface (mathematics) ,Elevation ,Sea-surface height ,Geophysics ,Geodesy ,Physics::Geophysics ,EGM96 ,Ocean surface topography ,Geochemistry and Petrology ,Geoid ,Undulation of the geoid ,Altimeter ,Physics::Atmospheric and Oceanic Physics ,Geology

- Abstract

A method for splitting sea surface height measurements from satellite altimetry into geoid undulations and sea surface topography is presented. The method is based on a combination of the information from altimeter data and a dynamic sea surface height model. The model consists of geoid undulations and a quasi-geostrophic model for expressing the sea surface topography. The goal is the estimation of those values of the parameters of the sea surface height model that provide a least-squares fit of the model to the data. The solution is accomplished by the adjoint method which makes use of the adjoint model for computing the gradient of the cost function of the least-squares adjustment and an optimization algorithm for obtaining improved parameters. The estimation is applied to the North Atlantic. ERS-1 altimeter data of the year 1993 are used. The resulting geoid agrees well with the geoid of the EGM96 gravity model.

- Published

- 2001

- Full Text

- View/download PDF

33. Comments on Xu et al. (2006) Variance component estimation in linear inverse ill-posed models, J Geod 80(1):69–81

- Author

-

Karl-Rudolf Koch, Jürgen Kusche, 1.2 Global Geomonitoring and Gravity Field, 1.0 Geodesy and Remote Sensing, Departments, GFZ Publication Database, Deutsches GeoForschungsZentrum, and Gravity Field and Gravimetry -2009, Geoengineering Centres, GFZ Publication Database, Deutsches GeoForschungsZentrum

- Subjects

Well-posed problem ,Estimation ,Geophysics ,Geochemistry and Petrology ,Econometrics ,Inverse ,Variance components ,550 - Earth sciences ,Computers in Earth Sciences ,Mathematics

- Published

- 2007

- Full Text

- View/download PDF

34. Robust Kalman filter for rank deficient observation models

- Author

-

Yuanxi Yang and Karl-Rudolf Koch

- Subjects

ComputingMethodologies_IMAGEPROCESSINGANDCOMPUTERVISION ,Robust statistics ,Kalman filter ,Invariant extended Kalman filter ,Bayesian statistics ,Extended Kalman filter ,ComputingMethodologies_PATTERNRECOGNITION ,Geophysics ,Geochemistry and Petrology ,Control theory ,Fast Kalman filter ,Ensemble Kalman filter ,Computers in Earth Sciences ,Algorithm ,Alpha beta filter ,Mathematics

- Abstract

A robust Kalman filter is derived for rank deficient observation models. The datum for the Kalman filter is introduced at the zero epoch by the choice of a generalized inverse. The robust filter is obtained by Bayesian statistics and by applying a robust M-estimate. Outliers are not only looked for in the observations but also in the updated parameters. The ability of the robust Kalman filter to detect outliers is demonstrated by an example.

- Published

- 1998

- Full Text

- View/download PDF

35. Outlier Detection for the Nonlinear Gauss Helmert Model With Variance Components by the Expectation Maximization Algorithm

- Author

-

Karl-Rudolf Koch

- Subjects

Nonlinear system ,Mathematical optimization ,Laser scanning ,Modeling and Simulation ,Gauss ,Expectation–maximization algorithm ,Earth and Planetary Sciences (miscellaneous) ,Variance components ,Anomaly detection ,Engineering (miscellaneous) ,Algorithm ,Mathematics

- Abstract

Best invariant quadratic unbiased estimates (BIQUE) of the variance and covariance components for a nonlinear Gauss Helmert (GH) model are derived. To detect outliers, the expectation maximization (EM) algorithm based on the variance-inflation model and the mean-shift model is applied, which results in an iterative reweighting least squares. Each step of the iterations for the EM algorithm therefore includes first the iterations for linearizing the GH model and then the iterations for estimating the variance components. The method is applied to fit a surface in three-dimensional space to the three coordinates of points measured, for instance, by a laser scanner. The surface is represented by a polynomial of second degree and the variance components of the three coordinates are estimated. Outliers are detected by the EM algorithm based on the variance-inflation model and identified by the EM algorithm for the mean-shift model.

- Published

- 2014

- Full Text

- View/download PDF

36. Simple Layer Model of the Geopotential in Satellite Geodesy

- Author

-

Karl-Rudolf Koch

- Subjects

Geopotential ,Geography ,Satellite geodesy ,Earth ellipsoid ,Theoretical gravity ,Vertical deflection ,European Combined Geodetic Network ,Physical geodesy ,Geophysics ,Geodesy ,Gravity anomaly

- Published

- 2013

- Full Text

- View/download PDF

37. Expectation maximization algorithm for the variance-inflation model by applying the t-distribution

- Author

-

Karl-Rudolf Koch and Boris Kargoll

- Subjects

Variance inflation factor ,Heavy-tailed distribution ,Modeling and Simulation ,Statistics ,Expectation–maximization algorithm ,Earth and Planetary Sciences (miscellaneous) ,Econometrics ,T distribution ,Engineering (miscellaneous) ,Mathematics

- Published

- 2013

- Full Text

- View/download PDF

38. Comparison of two robust estimations by expectation maximization algorithms with Huber’s method and outlier tests

- Author

-

Karl-Rudolf Koch

- Subjects

Computer science ,business.industry ,Modeling and Simulation ,Monte Carlo method ,Outlier ,Expectation–maximization algorithm ,Earth and Planetary Sciences (miscellaneous) ,Pattern recognition ,Artificial intelligence ,Mean-shift ,business ,Engineering (miscellaneous) ,Algorithm

- Published

- 2013

- Full Text

- View/download PDF

39. The influence of different osteosynthesis configurations with locking compression plates (LCP) on stability and fracture healing after an oblique 45° angle osteotomy

- Author

-

Michael Plecko, Rudolf Koch, Stephen J. Ferguson, Michèle Sidler, Alexander Bürki, Philippe Gédet, Jörg A Auer, Ulrich Stoeckle, Daniel Andermatt, Katja Nuss, Robert Frigg, Birthe Pegel, Peter W Kronen, Nico Lagerpusch, Brigitte von Rechenberg, Karina Klein, University of Zurich, and von Rechenberg, Brigitte

- Subjects

medicine.medical_specialty ,medicine.medical_treatment ,Bone Screws ,610 Medicine & health ,Bone healing ,Osteotomy ,Weight-Bearing ,Fracture Fixation, Internal ,2732 Orthopedics and Sports Medicine ,medicine ,Animals ,Simple fracture ,Tibia ,General Environmental Science ,Fracture Healing ,Orthodontics ,Sheep ,Osteosynthesis ,business.industry ,Torsion (mechanics) ,Oblique case ,Stiffness ,Equipment Design ,Biomechanical Phenomena ,Surgery ,Tibial Fractures ,10021 Department of Trauma Surgery ,General Earth and Planetary Sciences ,570 Life sciences ,biology ,Female ,10090 Equine Department ,medicine.symptom ,business ,2711 Emergency Medicine ,Bone Plates

- Abstract

Background: Locking compression plates are used in various configurations with lack of detailed information on consequent bone healing. Study design: In this in vivo study in sheep, 5 different applications of locking compression plate (LCP) were tested using a 45° oblique osteotomy simulating a simple fracture pattern. 60 Swiss Alpine sheep were assigned to 5 different groups with 12 sheep each (Group 1: interfragmentary lag screw and an LCP fixed with standard cortex screws as neutralisation plate; Group 2: interfragmentary lag screw and LCP with locking head screws; Group 3: compression plate technique (hybrid construct); Group 4: internal fixator without fracture gap; Group 5: internal fixator with 3 mm gap at the osteotomy site). One half of each group (6 sheep) was monitored for 6 weeks, and the other half (6 sheep) was followed for 12 weeks. Methods: X-rays at 3, 6, 9 and 12 weeks were performed to monitor the healing process. After sacrifice, operated tibiae were tested biomechanically for nondestructive torsion and compared to the tibia of the healthy opposite side. After testing, specimens were processed for microradiography, histology, histomorphometry and assessment of calcium deposition by fluorescence microscopy. Results: In all groups bone healing occurred without complications. Stiffness in biomechanical testing showed a tendency for higher values in G2 but results were not statistically significant. Values for G5 were significantly lower after 6 weeks, but after 12 weeks values had improved to comparable results. For all groups, except G3, stiffness values improved between 6 and 12 weeks. Histomorphometrical data demonstrate endosteal callus to be more marked in G2 at 6 weeks. Discussion and conclusion: All five configurations resulted in undisturbed bone healing and are considered safe for clinical application.

- Published

- 2012

40. The dynamisation of locking plate osteosynthesis by means of dynamic locking screws (DLS)-an experimental study in sheep

- Author

-

Robert Frigg, Rudolf Koch, Jörg A Auer, Stephen J. Ferguson, Michèle Sidler, Alexander Bürki, Michael Plecko, Katja Nuss, Brigitte von Rechenberg, Peter W Kronen, Daniel Andermatt, Nico Lagerpusch, Ulrich Stoeckle, Karina Klein, University of Zurich, and von Rechenberg, Brigitte

- Subjects

Callus formation ,medicine.medical_treatment ,Bone Screws ,Human bone ,610 Medicine & health ,Bone healing ,Osteotomy ,Locking plate ,Fracture Fixation, Internal ,2732 Orthopedics and Sports Medicine ,Osteogenesis ,medicine ,Animals ,Tibia ,Bony Callus ,General Environmental Science ,Fracture Healing ,Osteosynthesis ,Sheep ,business.industry ,Anatomy ,Compression (physics) ,10021 Department of Trauma Surgery ,General Earth and Planetary Sciences ,Female ,10090 Equine Department ,2711 Emergency Medicine ,business ,Bone Plates ,Biomedical engineering

- Abstract

In this in vivo study a new generation of locking screws was tested. The design of the dynamic locking screw (DLS) enables the dynamisation of the cortex underneath the plate (cis-cortex) and, therefore, allows almost parallel interfragmentary closure of the fracture gap. A 45° angle osteotomy was performed unilaterally on the tibia of 37 sheep. Groups of 12 sheep were formed and in each group a different osteotomy gap (0, 1 and 3 mm) was fixed using a locking compression plate (LCP) in combination with the DLS. The healing process was monitored radiographically every 3 weeks for 6 or 12 weeks, respectively. After this time the sheep were sacrificed, the bones harvested and the implants removed. The isolated bones were evaluated in the micro-computed tomography unit, tested biomechanically and evaluated histologically. The best results of interfragmentary movement (IFM) were shown in the 0 mm configuration. The bones of this group demonstrated histomorphometrically the most distinct callus formation on the cis-cortex and the highest torsional stiffness relative to the untreated limb at 12 weeks after surgery. This animal study showed that IFM stimulated the synthesis of new bone matrix, especially underneath the plate, and thus could solve a current limitation in normal human bone healing. The DLS will be a valuable addition to the locking screw technology and improve fracture healing.

- Published

- 2011

41. Assessment techniques for measurement of target erosion/redeposition in large tokamaks

- Author

-

F. Weschenfelder, R. Behrisch, Albert Rudolf Koch, J.P. Coad, L. De Kock, R. Wilhelm, and P. Wienhold

- Subjects

Nuclear and High Energy Physics ,Jet (fluid) ,Tokamak ,Materials science ,business.industry ,Divertor ,Nanotechnology ,Fusion power ,Erosion (morphology) ,law.invention ,Optics ,Nuclear Energy and Engineering ,law ,Deposition (phase transition) ,General Materials Science ,Speckle imaging ,Sensitivity (control systems) ,business

- Abstract

Two possible techniques for the measurement of erosion/redeposition in the new JET divertor have been assessed; colour fringe analysis (CFA) and speckle interferometry. CFA is a simple technique in which the target is viewed with a colour camera, and can study optically transparent deposited films up to 1 μm in thickness. Speckle interferometry is a powerful technique for following changes in surface topology, but its sensitivity of >1 μm makes it more ideally suited to the next generation of tokamaks.

- Published

- 1993

- Full Text

- View/download PDF

42. Uncertainty of NURBS surface fit by Monte Carlo simulations

- Author

-

Karl-Rudolf Koch

- Subjects

Surface (mathematics) ,Hybrid Monte Carlo ,Computer science ,Modeling and Simulation ,Monte Carlo method ,Earth and Planetary Sciences (miscellaneous) ,Dynamic Monte Carlo method ,Monte Carlo method in statistical physics ,Kinetic Monte Carlo ,Statistical physics ,Direct simulation Monte Carlo ,Engineering (miscellaneous) ,Monte Carlo molecular modeling

- Published

- 2009

- Full Text

- View/download PDF

43. SE1 Green Electronics: Environmental Impacts, Power, E-Waste

- Author

-

Rudolf Koch and Jan Sevenhans

- Subjects

Engineering ,Ambient intelligence ,business.industry ,Electronic packaging ,Green electronics ,Electronics ,business ,Combustion ,Electronic waste ,Automotive engineering ,Power (physics)

- Published

- 2008

- Full Text

- View/download PDF

44. Determining uncertainties of correlated measurements by Monte Carlo simulations applied to laserscanning

- Author

-

Karl-Rudolf Koch

- Subjects

Hybrid Monte Carlo ,Modeling and Simulation ,Monte Carlo method ,Earth and Planetary Sciences (miscellaneous) ,Dynamic Monte Carlo method ,Credible interval ,Monte Carlo method in statistical physics ,Kinetic Monte Carlo ,Statistical physics ,Engineering (miscellaneous) ,Monte Carlo molecular modeling ,Mathematics

- Published

- 2008

- Full Text

- View/download PDF

45. Evaluation of uncertainties in measurements by Monte Carlo simulations with an application for laserscanning

- Author

-

Karl-Rudolf Koch

- Subjects

Bayesian statistics ,Hybrid Monte Carlo ,Modeling and Simulation ,Monte Carlo method ,Earth and Planetary Sciences (miscellaneous) ,Dynamic Monte Carlo method ,Monte Carlo method in statistical physics ,Statistical physics ,Kinetic Monte Carlo ,Engineering (miscellaneous) ,Mathematics ,Monte Carlo molecular modeling

- Published

- 2008

- Full Text

- View/download PDF

46. A Fully Integrated SoC for GSM/GPRS in 0.13µm CMOS

- Author

-

M. Hammes, E. Labarre, Jens Kissing, P.-H. Bonnaud, Rudolf Koch, Andre Hanke, and C. Schwoerer

- Subjects

Engineering ,Digital signal processor ,CMOS ,business.industry ,GSM ,Embedded system ,Spectral mask ,System on a chip ,General Packet Radio Service ,business ,Sensitivity (electronics) ,Digital signal processing ,Computer hardware

- Abstract

A single-chip radio for quad-band GSM/GPRS applications integrates the RF, analog/mixed-signal blocks, DSP, application processor, RAM/ROM, and audio. It is implemented in a 0.13µm CMOS process. The RX achieves -112.5dBm/-110.5dBm sensitivity and the TX meets all the spectral mask requirements while using a 1.5V supply.

- Published

- 2006

- Full Text

- View/download PDF

47. Foundations of Bayesian Statistics

- Author

-

Karl-Rudolf Koch

- Subjects

Bayesian statistics ,Statement (computer science) ,Bayesian econometrics ,Econometrics ,Geodetic datum ,Bayesian network ,Measure (mathematics) ,Random variable ,Statistics::Computation ,Confidence region ,Mathematics

- Abstract

In three basic points Bayesian statistics differs from traditional statistics. First Bayesian statistics is founded on Bayes’ theorem. By this theorem unknown parameters are estimated, confidence regions for the unknown parameters are established and hypotheses for the parameters are tested. Furthermore, Bayesian statistics extends the notion of probability by defining the probability for statements or propositions. The probability is a measure for the plausibility of a statement. Finally, the unknown parameters of Bayesian statistics are random variables. But nevertheless, the unknown parameters can represent constants. There are numerous applications of Bayesian statistics for the analysis of geodetic data, some of them are pointed out.

- Published

- 2003

- Full Text

- View/download PDF

48. Regularization of geopotential determination from satellite data by variance components

- Author

-

Jürgen Kusche and Karl-Rudolf Koch

- Subjects

Geopotential ,business.industry ,Linear system ,Estimator ,Bayesian inference ,Geodesy ,Gradiometer ,Physics::Geophysics ,Weighting ,Matrix (mathematics) ,Geophysics ,Geochemistry and Petrology ,Physics::Space Physics ,Global Positioning System ,Computers in Earth Sciences ,business ,Algorithm ,Mathematics

- Abstract

Different types of present or future satellite data have to be combined by applying appropriate weighting for the determination of the gravity field of the Earth, for instance GPS observations for CHAMP with satellite to satellite tracking for the coming mission GRACE as well as gradiometer measurements for GOCE. In addition, the estimate of the geopotential has to be smoothed or regularized because of the inversion problem. It is proposed to solve these two tasks by Bayesian inference on variance components. The estimates of the variance components are computed by a stochastic estimator of the traces of matrices connected with the inverse of the matrix of normal equations, thus leading to a new method for determining variance components for large linear systems. The posterior density function for the variance components, weighting factors and regularization parameters are given in order to compute the confidence intervals for these quantities. Test computations with simulated gradiometer observations for GOCE and satellite to satellite tracking for GRACE show the validity of the approach.

- Published

- 2002

49. Numerische Verfahren

- Author

-

Karl-Rudolf Koch

- Published

- 2000

- Full Text

- View/download PDF

50. Einführung in die Bayes-Statistik

- Author

-

Karl-Rudolf Koch

- Subjects

Computer science

- Published

- 2000

- Full Text

- View/download PDF
